Mastering Data Normalization in Machine Learning: A Complete Guide

We are in the process of releasing the third phase of our product, and we’re thrilled to unveil advanced features for data integration. Among these, data normalization stands out as a core element. To help our audience grasp its significance, we’ll explore what data normalization entails, why it’s essential, and the specific algorithms we’ve integrated into our platform. This post kicks off a series where we’ll dive into the various components of data integration in machine learning.


Data Normalization Definition

Data normalization means rescaling numeric features to a common range or distribution so that no feature dominates a machine learning model simply because of its units or magnitude. Raw data often contains features with very different ranges and scales, which can hurt scale-sensitive algorithms such as k-nearest neighbors, k-means, support vector machines, and any model trained with gradient descent. Normalization mitigates these issues, helping such algorithms train efficiently and produce more accurate results.

For example, suppose one feature holds monetary values in the thousands of dollars and another holds percentages between 0 and 100. A model that compares raw magnitudes will be driven almost entirely by the monetary feature. Normalization puts both features on comparable scales, improving both performance and stability in machine learning workflows.
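
To make the imbalance concrete, here is a minimal sketch using three hypothetical records, each described by an annual income in dollars and a discount rate in percent (the numbers are invented for illustration). It finds the nearest neighbor of record A by Euclidean distance, the measure k-nearest neighbors relies on, first on the raw values and then after min-max scaling each feature to [0, 1]. The helper names are our own, not part of any library.

```python
import math

# Hypothetical records: (annual income in dollars, discount rate in percent).
records = {
    "A": (52_000.0, 5.0),
    "B": (53_000.0, 40.0),
    "C": (60_000.0, 6.0),
}

def min_max_scale_records(rows):
    """Rescale every feature (column) to [0, 1] using its min and max over all rows."""
    cols = list(zip(*rows.values()))
    lows = [min(c) for c in cols]
    spans = [(max(c) - min(c)) or 1.0 for c in cols]  # guard against a constant column
    return {
        key: tuple((v - lo) / span for v, lo, span in zip(vals, lows, spans))
        for key, vals in rows.items()
    }

def nearest_to(target, rows):
    """Return the key of the row closest to `target` by Euclidean distance."""
    others = [k for k in rows if k != target]
    return min(others, key=lambda k: math.dist(rows[target], rows[k]))

print("raw nearest to A:   ", nearest_to("A", records))                         # B
print("scaled nearest to A:", nearest_to("A", min_max_scale_records(records)))  # C
```

On raw values, the $1,000 income gap between A and B outweighs their 35-point discount gap, so B looks closest to A. After scaling, a full-range discount difference counts as much as a full-range income difference, and C becomes the nearest neighbor.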

Why Does Data Normalization Matter?

1. Enhances Model Accuracy

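Scaling features to similar ranges lets scale-sensitive algorithms train more effectively and generate more reliable predictions. (Tree-based models such as decision trees and random forests are largely unaffected by feature scaling.)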

2. Reduces Feature Bias

Features with larger numeric ranges no longer overshadow those with smaller ones in distance calculations or regularization penalties, so the model receives more balanced input.

3. Optimizes Algorithm Efficiency

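Optimization algorithms such as gradient descent converge faster on well-scaled data. When features differ greatly in scale, the loss surface becomes elongated: the step size must be small enough for the steepest direction, so progress along the flatter directions is slow. This makes normalization a crucial preprocessing step for models trained this way.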
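
A small sketch makes the gradient-descent point concrete. The data are hand-built and hypothetical: two uncorrelated features whose magnitudes differ by a factor of 1,000, with a target that is an exact linear combination of them. Each run minimizes the least-squares loss with batch gradient descent and uses the step size 1/L, where L is the largest curvature of the loss, a standard safe choice for this method. The function names are illustrative, not from any library.

```python
import math
import statistics

def gd_iterations(X, y, lr, tol=1e-6, max_iters=10_000):
    """Run batch gradient descent on the least-squares loss (1/2n) * ||Xw - y||^2.

    Returns the number of steps taken before the gradient norm drops below
    `tol`, or None if that does not happen within `max_iters` steps.
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for step in range(max_iters):
        residuals = [sum(x * wj for x, wj in zip(row, w)) - t for row, t in zip(X, y)]
        grad = [sum(row[j] * r for row, r in zip(X, residuals)) / n for j in range(d)]
        if math.hypot(*grad) < tol:
            return step
        w = [wj - lr * g for wj, g in zip(w, grad)]
    return None

def standardize_columns(X):
    """Center each column at 0 and divide it by its population standard deviation."""
    cols = list(zip(*X))
    stats = [(statistics.fmean(c), statistics.pstdev(c) or 1.0) for c in cols]
    return [[(v - m) / s for v, (m, s) in zip(row, stats)] for row in X]

# Hypothetical data: two uncorrelated features on very different scales.
X = [[1, 1000], [-1, 1000], [1, -1000], [-1, -1000]]
y = [5, 1, -1, -5]  # y = 2*x1 + 0.003*x2

# Step size 1/L: the loss curvatures are 1 and 1e6 on the raw features (L = 1e6)
# and both equal 1 after standardization (L = 1).
print("raw:         ", gd_iterations(X, y, lr=1e-6))
print("standardized:", gd_iterations(standardize_columns(X), y, lr=1.0))
```

On the raw features, the step size must be tiny to stay stable along the steep `x2` direction, so the weight for `x1` barely moves and the run has not converged after 10,000 steps (it returns `None`). After standardization, both directions are equally curved and, for this idealized data, a single step lands on the solution.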

Key Algorithms for Data Normalization

Our platform incorporates four major normalization techniques, each tailored to specific scenarios:

1. StandardScaler

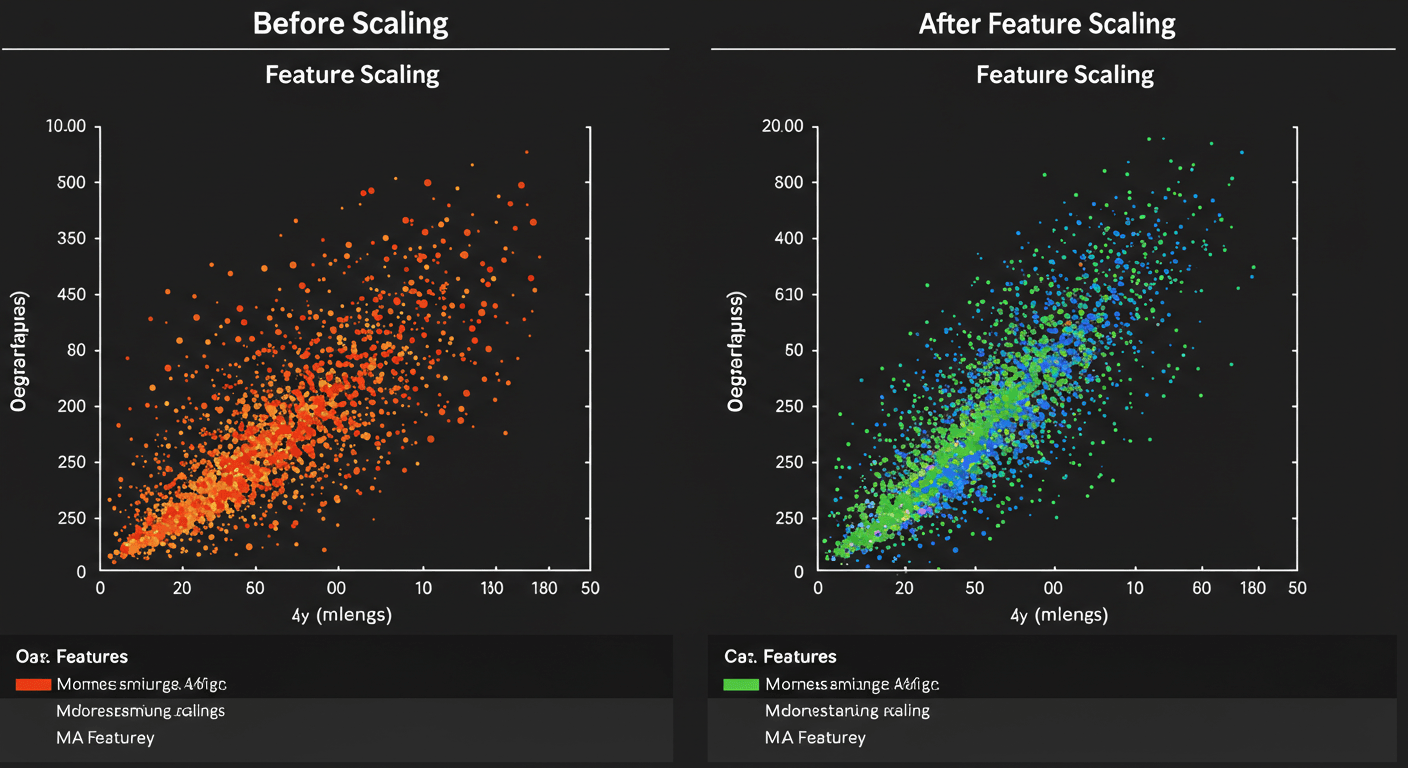

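StandardScaler centers each feature at zero by subtracting its mean and then divides by its standard deviation, so the result has mean 0 and unit variance. It works best when features are roughly Gaussian (normal). Note that it shifts and rescales the data without changing the shape of its distribution, and because the mean and standard deviation are sensitive to outliers, extreme values can distort the result.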
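
The formula is short enough to show directly. This is a minimal, dependency-free sketch for a single feature, not our platform's implementation; the function name and the sample heights are hypothetical. It uses the population standard deviation, and it leaves a constant feature at zero instead of dividing by zero.

```python
import statistics

def standard_scale(values):
    """Return (x - mean) / std for one feature, using the population std (ddof=0)."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values) or 1.0  # constant feature: avoid dividing by zero
    return [(v - mean) / std for v in values]

heights_cm = [160.0, 170.0, 180.0]  # hypothetical feature values
scaled = standard_scale(heights_cm)
print(scaled)  # symmetric around 0; the middle value maps to exactly 0.0
```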

2. MinMaxScaler

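MinMaxScaler linearly rescales each feature to a defined range, typically [0, 1], using the feature's observed minimum and maximum. Since the transformation is linear, it preserves the relative spacing between data points. This method suits features with well-defined bounds, but a single extreme value sets the minimum or maximum, so it is sensitive to outliers.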
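
As a sketch under the same caveats (plain Python, one feature, hypothetical names and data), min-max scaling maps the observed minimum to the lower bound `a` and the maximum to the upper bound `b` of the target range, computing a + (x - min) / (max - min) * (b - a).

```python
def min_max_scale(values, feature_range=(0.0, 1.0)):
    """Linearly map one feature onto feature_range using its observed min and max."""
    lo, hi = min(values), max(values)
    a, b = feature_range
    span = (hi - lo) or 1.0  # constant feature: avoid dividing by zero
    return [a + (v - lo) / span * (b - a) for v in values]

pixel_values = [0, 64, 128, 255]  # hypothetical 8-bit intensities
print(min_max_scale(pixel_values))           # first value 0.0, last value 1.0
print(min_max_scale(pixel_values, (-1, 1)))  # first value -1.0, last value 1.0
```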

3. RobustScaler

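RobustScaler subtracts each feature's median and divides by its interquartile range (IQR, the distance between the 25th and 75th percentiles). Because these statistics are barely affected by a few extreme values, it handles datasets with outliers well; the outliers themselves remain in the output rather than being removed. This makes it a good fit for skewed or heavy-tailed data.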
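
Here is a minimal sketch of the median/IQR formula for one feature, again not our platform's code; the function name and latency values are hypothetical. It uses Python's `statistics.quantiles` with `method="inclusive"`, which interpolates linearly between sorted data points to find the quartiles.

```python
import statistics

def robust_scale(values):
    """Return (x - median) / IQR for one feature, where IQR = Q3 - Q1."""
    # With n=4 and method="inclusive", quantiles returns [Q1, median, Q3].
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = (q3 - q1) or 1.0  # avoid dividing by zero when Q1 == Q3
    median = statistics.median(values)
    return [(v - median) / iqr for v in values]

# Hypothetical response times in milliseconds, with one extreme outlier.
latencies = [10, 12, 11, 13, 12, 500]
print(robust_scale(latencies))
```

Here Q1 = 11.25, Q3 = 12.75, and the median is 12, so the typical latencies land between about -1.3 and 0.7 while the 500 ms outlier maps to roughly 325. Standardizing the same data instead would let the outlier inflate the standard deviation and squeeze the five typical values into a narrow band near -0.45.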

4. MaxAbsScaler

MaxAbsScaler divides each value by the maximum absolute value of its feature, mapping the feature into [-1, 1]. Because it neither shifts nor centers the data, zeros stay zero and the sparsity of the dataset is preserved. This makes it particularly effective for sparse data, such as one-hot encoded features.
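
A minimal sketch of the max-abs formula on one hypothetical sparse feature follows; the name and data are illustrative only. Production sparse-matrix implementations touch only the stored nonzero entries, while this list version just shows the arithmetic and confirms that zeros are left untouched.

```python
def max_abs_scale(values):
    """Divide one feature by its largest absolute value, mapping it into [-1, 1]."""
    max_abs = max(abs(v) for v in values) or 1.0  # an all-zero feature is left as-is
    return [v / max_abs for v in values]

# Hypothetical sparse feature: mostly zeros, with a few signed nonzero entries.
feature = [0, 0, 4, 0, -8, 0, 0, 2]
scaled = max_abs_scale(feature)
print(scaled)  # zeros stay exactly 0.0, so the sparsity pattern is unchanged
```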

The Bigger Picture: Normalization in Data Integration

Data normalization is one of many crucial steps in integrating datasets from diverse sources. By putting features from different sources on a common scale, it prepares them for downstream processes such as feature selection, data fusion, and machine learning model training.

In upcoming posts, we’ll dive deeper into other aspects of data integration, such as addressing missing data, feature engineering, and combining datasets to achieve seamless workflows.

Final Thoughts

Data normalization plays a pivotal role in preparing data for machine learning. Its inclusion in our latest release reflects our commitment to providing tools that simplify workflows and improve outcomes. By leveraging algorithms like StandardScaler, MinMaxScaler, RobustScaler, and MaxAbsScaler, we aim to empower users to build highly efficient and accurate models.

Stay tuned as we continue to explore more about data integration and share valuable insights to elevate your machine learning projects!